Voice bots, sometimes referred to as voice-activated assistants, are becoming essential for voice navigation, question answering, smart home control, and other daily activities. However, handling multiple languages and accents presents unique challenges. This blog explores how voice bots such as HEXA, Alexa, and Siri handle linguistic diversity and understand us regardless of our language or accent. The goal is to understand and respond to diverse speech patterns, ensuring seamless interaction with technology and a better user experience.

Have you ever wondered how these voice bots manage to understand and respond to different languages and accents? Let’s delve into this fascinating world of multilingual voice bots.

The Magic Behind Voice Bots

Voice bots rely on two key components to understand languages and accents: automatic speech recognition (ASR) and natural language understanding (NLU). The interplay between these two technologies is what makes intelligent speech recognition systems possible.

Automatic Speech Recognition (ASR): This technology analyzes the sounds of your voice to convert speech to text (STT). Deep learning models that account for variations in pronunciation, pitch, and speed match speech sounds to words. ASR models are trained on massive datasets of speech samples, which helps them transcribe speech accurately and efficiently.

Natural Language Understanding (NLU): Thanks to this technology, voice bots can use methods like sentiment analysis, named entity recognition, and classification to infer meaning from transcribed text. This allows bots to decode the structure and context of phrases and comprehend more than the individual words. When ASR and NLU are combined, voice bots can be remarkably accurate in understanding a wide range of languages and accents.
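To make the two stages concrete, here is a minimal sketch of how a transcript might flow from ASR into NLU. Everything in it is an assumption for illustration: `fake_asr` simply pretends the audio bytes already contain the transcript, and the keyword table and device list are hypothetical stand-ins for trained models. It is not how HEXA or any other product is implemented.

```python
import re
from dataclasses import dataclass, field


@dataclass
class NluResult:
    intent: str
    entities: dict = field(default_factory=dict)


# Hypothetical intent keywords; a production NLU model would learn these from data.
INTENT_KEYWORDS = {
    "turn_on_device": ("turn on", "switch on"),
    "turn_off_device": ("turn off", "switch off"),
    "get_weather": ("weather", "forecast"),
}

# Hypothetical device names for a toy named entity recognizer.
DEVICES = ("light", "fan", "thermostat")


def fake_asr(audio: bytes) -> str:
    """Stand-in for a real ASR model: treat the audio bytes as the transcript."""
    return audio.decode("utf-8").lower().strip()


def understand(text: str) -> NluResult:
    """Toy NLU: classify the intent by keyword and extract a device entity."""
    intent = "unknown"
    for name, phrases in INTENT_KEYWORDS.items():
        if any(phrase in text for phrase in phrases):
            intent = name
            break
    entities = {}
    match = re.search(r"\b(" + "|".join(DEVICES) + r")s?\b", text)
    if match:
        entities["device"] = match.group(1)
    return NluResult(intent=intent, entities=entities)


def handle_utterance(audio: bytes) -> NluResult:
    """Run the two stages in order: speech to text, then text to meaning."""
    return understand(fake_asr(audio))


if __name__ == "__main__":
    print(handle_utterance(b"Please switch on the lights"))
```

The point of the sketch is the separation of concerns: the ASR stage only produces text, and the NLU stage only interprets text, so either one can be swapped out without touching the other.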

Cracking the Code of Accents and Dialects

To teach voice bots like HEXA the fine details of various pronunciations, developers train them on a large amount of speech data covering a variety of accents. The bots rely on ASR and NLU models to understand dialects by considering regional idioms, slang, and sentence structures. This contextual awareness makes it easier for voice bots to understand meaning even when pronunciations differ.
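One simple way to picture tolerance for pronunciation differences is fuzzy matching: if an accent causes the transcript to contain a near miss such as "shedule", the bot can map it to the closest word it expects. The sketch below uses Python's standard `difflib` module for this. The vocabulary and the 0.8 similarity cutoff are hypothetical, and real systems typically handle accent variation inside the acoustic and language models; this is only a toy illustration of the idea.

```python
import difflib

# Hypothetical vocabulary of words the bot expects to hear.
VOCABULARY = ["schedule", "balance", "tomato", "water", "payment"]


def normalize_word(word: str, cutoff: float = 0.8) -> str:
    """Map a transcribed word to its closest vocabulary entry, if close enough."""
    candidate = word.lower()
    matches = difflib.get_close_matches(candidate, VOCABULARY, n=1, cutoff=cutoff)
    # Keep the original word when nothing in the vocabulary is similar enough.
    return matches[0] if matches else candidate


def normalize_transcript(text: str) -> str:
    """Normalize each whitespace-separated word of a transcript."""
    return " ".join(normalize_word(word) for word in text.split())


if __name__ == "__main__":
    print(normalize_transcript("check my balence"))
```

A higher cutoff makes the matcher more conservative, which reduces the risk of "correcting" a word the user actually meant.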

The more language variations a voice bot is exposed to during training, the better it can recognize distinct accents and languages. Many voice bots today are impressively multilingual. With the right speech data and smart NLU, they can understand different English accents and converse fluently in multiple languages such as English, Hindi, Tamil, Telugu, Malayalam, and more.

Machine Learning: Voice bots are trained on vast datasets containing diverse speech patterns. Their ability to identify and comprehend various accents and dialects improves with greater exposure to them.

Optimization Techniques: These bots use computational linguistics to benchmark languages and accents against a database, improving their ability to recognize and process speech.
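Benchmarking can be made concrete with word error rate (WER), a standard metric for speech recognition: the number of word-level edits needed to turn the bot's transcript into the reference transcript, divided by the number of reference words. The source does not say which metric these bots use, so treat WER as an assumption here; the accent labels and sample sentences are also hypothetical. The sketch groups test utterances by accent so weak spots stand out.

```python
from collections import defaultdict


def edit_distance(ref: list, hyp: list) -> int:
    """Word-level Levenshtein distance using a rolling dynamic-programming row."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word edits / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)


def wer_by_accent(samples) -> dict:
    """Aggregate WER per accent from (accent, reference, hypothesis) tuples."""
    edits = defaultdict(int)
    words = defaultdict(int)
    for accent, reference, hypothesis in samples:
        ref = reference.lower().split()
        edits[accent] += edit_distance(ref, hypothesis.lower().split())
        words[accent] += len(ref)
    # Accents with no reference words are skipped to avoid dividing by zero.
    return {accent: edits[accent] / words[accent] for accent in words if words[accent]}


if __name__ == "__main__":
    samples = [
        ("accent_a", "play my music", "play my music"),
        ("accent_b", "play my music", "pay my music"),
    ]
    print(wer_by_accent(samples))
```

An accent with a noticeably higher WER than the others is a signal that the training data needs more speech samples from that group.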

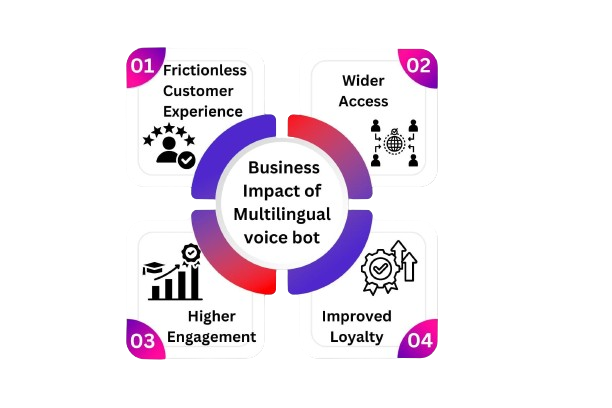

The Business Impact

Understanding languages and accents is not just a technological feat; it has significant implications for businesses. Here’s why:

Frictionless Customer Experience (CX): When a voice bot understands customers with ease, conversations become smoother and more personalized.

Wider Access: By removing linguistic and accent barriers, businesses can reach a wider range of demographics and improve the experience for each of them.

Higher Engagement: Intuitive voice interactions across phones, apps, and contact centers improve engagement.

Improved Loyalty: Voice bots increase brand trust and loyalty by making customers feel valued and appreciated.

The Future of AI Multilingual Voice Bots

Voice bots use AI to understand natural language, even across different accents and languages, allowing them to deliver personalized, frictionless experiences that customers love. HEXA uses generative AI to create intelligent voice bots that sound incredibly human, conversing naturally in over 100 languages and dialects to provide an exceptional customer experience. Voice is expected to play a growing role in business processes and customer engagement initiatives.

Technology is available today to build multilingual self-service experiences, but it requires an extra level of craftsmanship. Companies like Haloocom have both proprietary technology and research expertise to help create new self-service experiences for customers in any language.